Yunhao (Andy) Ge 葛云皓

Research Scientist @ NVIDIA

Email: yunhaog at nvidia dot com


I am a Research Scientist at NVIDIA's Generalist Embodied Agent Research, working on Project Groot. I have broad research interests in multimodal foundation models and robotics, with a recent focus on building generally capable agents based on world foundation models. I was previously a Research Scientist at NVIDIA's Deep Imagination Research, where I worked on NVIDIA Cosmos. I received my Ph.D. in Computer Science from the University of Southern California, advised by Prof. Laurent Itti, and was honored with the Amazon ML Fellowship. I was a Visiting Ph.D. Student at the Stanford Vision and Learning Lab (SVL), advised by Prof. Jiajun Wu.

[2026/02/04] We have released DreamZero, a World Action Model (WAM) that enables zero-shot generalization to unseen tasks and few-shot embodiment learning!

[2026/01/22] We have released Cosmos Policy, a state-of-the-art robot policy built on a video diffusion model backbone (Cosmos)!

[2025/12/15] We have released I-Scene: 3D Instance Models are Implicit Generalizable Spatial Learners!

[2025/10/28] We have released Cosmos-Predict2.5 and Cosmos-Transfer2.5, an improved world foundation model platform for physical AI!

[2025/03/18] We have released Cosmos Transfer1, a world-to-world transfer model designed to bridge the perceptual divide between simulated and real-world environments!

[2025/02/07] One paper, DreamDistribution for generating diverse customized images, is accepted by ICLR 2025. Code released.

[2025/01/06] We have released Cosmos, a world foundation model platform for physical AI!

[2024/11/11] We have released Edify 3D, a cutting-edge technology for 3D generation!

[2024/11/11] We have released Edify Image, a family of diffusion models for photorealistic image generation!

[2024/07/30] GenUSD is proudly featured in Real-Time Live! at SIGGRAPH 2024.

[2024/05/15] One paper, BEHAVIOR Vision Suite for Customizable Dataset Generation, is accepted by CVPR 2024 (Highlight). Code released.

[2024/05/01] One paper, Visual Fact Checker, LLM Agent Tool Use for 2D/3D captioning, is accepted by CVPR 2024.

[2023/12/21] We release the paper and code of DreamDistribution for personalized 2D/3D generation.

[2023/12/18] Starting a new journey at NVIDIA Research as a Research Scientist.

[2023/09/23] One paper on 3D Copy-Paste is accepted by NeurIPS 2023.

[2023/07/26] One paper on Lifelong (Continual) Learning is accepted by ICCV 2023.

[2023/05/09] One paper on Shared Knowledge Lifelong Learning, a new Lifelong Learning paradigm, is accepted by TMLR.

[2023/02/27] One paper on Multi-modal models' Robustness and Generalization is accepted by CVPR 2023.

[2022/12/01] Starting a new journey at Stanford Vision and Learning (SVL) Lab as a Visiting Student Researcher, advised by Prof. Jiajun Wu.

[2022/08/16] I was awarded the Amazon ML Fellowship (2022-2023), and will be an Amazon Fellow at USC + Amazon Center on Secure & Trusted Machine Learning. Thank you Amazon!

[2022/08/16] One paper on Disentangled and Convex Representation learning is accepted by WACV 2023; code is coming soon.

[2022/07/03] Two papers on NeRF and Humanoid Neural Network are accepted by ECCV 2022; code is released.

[2022/05/31] I will be joining Google Research as a student researcher, advised by Dr. Jiaping Zhao, Dr. Jie Ren, Dr. Balaji Lakshminarayanan, and Prof. Ming-Hsuan Yang.

[2022/01/20] I finally passed my qualifying exam and am now officially a Ph.D. candidate.

[2021/08/23] I will be joining Google Cloud AI as a student researcher, advised by Dr. Sercan Arik and Dr. Jinsung Yoon.

[2021/07/15] USC News, Tech Xplore, Technology Networks, and other media outlets covered our ICLR 2021 paper: Group-Supervised Learning (Enabling the 'imagination' of artificial intelligence).

[2021/05/17] I will be joining the Computer Vision Group at Microsoft Research Redmond as a research intern in summer 2021, advised by Dr. Vibhav Vineet and Dr. Neel Joshi.

[2021/04/07] Releasing Img2SceneGraph, a pipeline that converts images to scene graphs with node attributes! You are welcome to download and try it!

[2021/04/02] One paper (Graph Autoencoder for Graph Compression and Representation Learning) was accepted by Neural Compression Workshop @ICLR 2021 as Spotlight!

[2021/02/28] One paper (A Peek Into the Reasoning of Neural Networks: Interpreting with Structural Visual Concepts) was accepted by CVPR 2021!

[2021/01/16] One paper (Beneficial Perturbation Network for designing general adaptive artificial intelligence systems) was accepted by TNNLS!

[2021/01/12] One paper (Zero-shot Synthesis with Group-Supervised Learning) was accepted by ICLR 2021!

[2020/09/14] The Fonts dataset was proposed for fast testing and idea iteration on disentangled representation learning and zero-shot synthesis. You are welcome to download and try it!

[2020/07/02] One paper (Pose Augmentation: Class-agnostic Object Pose Transformation) was accepted by ECCV 2020!

[2020/05/12] I will be joining UII America as a research intern in summer 2020, advised by Dr. Ziyan Wu and Dr. Srikrishna Karanam.

[2019/08/12] I will be joining the USC CS Ph.D. Program in fall 2019, advised by Prof. Laurent Itti.

[2019/07/01] One paper (Synthesis and inpainting-based MR-CT registration) was accepted by MICCAI 2019.

[2019/03/01] One paper (Unpaired Whole-Body MR to CT Synthesis) was accepted by ISBI 2019.

World Action Model: DreamZero | Cosmos Policy

VLA: GR00T N1.6

Pre-training: Cosmos Predict2 | Cosmos-Predict1

Post-training: Cosmos-Transfer1

Generation: I-Scene | GenUSD | Edify3D | Edify Image | BEHAVIOR Vision Suite | 3D Copy Paste | Scenethesis | ArtiScene

Understanding: Visual Fact Checker | Describe Anything

DreamZero: World Action Models are Zero-shot Policies
Seonghyeon Ye†, Yunhao Ge*, Kaiyuan Zheng*, Shenyuan Gao*, Sihyun Yu*, George Kurian*, Suneel Indupuru*, You Liang Tan*, Chuning Zhu, Jiannan Xiang, Ayaan Malik, Kyungmin Lee, William Liang, Nadun Ranawaka, Jiasheng Gu, Yinzhen Xu, Guanzhi Wang, Fengyuan Hu, Avnish Narayan, Johan Bjorck, Jing Wang, Gwanghyun Kim, Dantong Niu, Ruijie Zheng, Yuqi Xie, Jimmy Wu, Qi Wang, Ryan Julian, Danfei Xu, Yilun Du, Yevgen Chebotar, Scott Reed, Jan Kautz, Yuke Zhu†, Linxi "Jim" Fan†, Joel Jang† (*=Core Contributors, †=Project Lead)
[paper] [project page] [code] [huggingface]

Cosmos-Policy: Cosmos-powered Multi-agent Policy Model for Physical AI
Moo Jin Kim, Yihuai Gao, Tsung-Yi Lin, Yen-Chen Lin, Yunhao Ge, Grace Lam, Percy Liang, Shuran Song, Ming-Yu Liu, Chelsea Finn, Jinwei Gu
ICLR 2026. [paper] [project page] [code] [huggingface]
Top 2% of submissions by average score at ICLR 2026

GR00T N1.6: An Improved Open Foundation Model for Generalist Humanoid Robots
NVIDIA (Yunhao Ge: core contributor)
[research blog] [code] [huggingface]

I-Scene: 3D Instance Models are Implicit Generalizable Spatial Learners
Lu Ling, Yunhao Ge, Yichen Sheng, Aniket Bera
CVPR 2026. [paper] [project page] [code] [huggingface]

Cosmos-Predict2.5 and Cosmos-Transfer2.5: Improved World Simulation with Video Foundation Models for Physical AI
NVIDIA (Yunhao Ge: core contributor)
[paper] [project page] [code] [huggingface]

Cosmos-Transfer1: Conditional World Generation with Adaptive Multimodal Control
NVIDIA (Yunhao Ge: core contributor)
[paper] [project page] [code] [huggingface] [video]

Cosmos: World Foundation Model Platform for Physical AI
NVIDIA (Yunhao Ge: core contributor)
Best AI + Best overall of CES 2025
[paper] [project page] [code] [huggingface] [video] [Demo API]

Describe Anything: Detailed Localized Image and Video Captioning
Long Lian, Yifan Ding, Yunhao Ge, Sifei Liu, Hanzi Mao, Boyi Li, Marco Pavone, Ming-Yu Liu, Trevor Darrell, Adam Yala, Yin Cui
ICCV 2025. [paper] [code] [project page] [demo]

Edify 3D: Scalable High-Quality 3D Asset Generation
NVIDIA (Yunhao Ge: core contributor)
[paper] [project page] [video]

Edify Image: High-Quality Image Generation with Pixel Space Laplacian Diffusion Models
NVIDIA (Yunhao Ge: core contributor)
[paper] [project page] [video]

GenUSD: 3D Scene Generation Made Easy
Jiashu Xu, Yunhao Ge, Yifan Ding, Yin Cui, Chen-Hsuan Lin, Xiaohui Zeng, Zekun Hao, Zhaoshuo Li, Donglai Xiang, Qianli Ma, Fangyin Wei, JP Lewis, Qinsheng Zhang, Seungjun Nah, Arun Mallya, Jingyi Jin, Hanzi Mao, Yen-Chen Lin, Pooya Jannaty, Tsung-Yi Lin, Ming-Yu Liu
ACM SIGGRAPH Real-Time Live! 2024. [paper] [project page]

BEHAVIOR Vision Suite: Customizable Dataset Generation via Simulation
Yunhao Ge*, Yihe Tang*, Jiashu Xu*, Cem Gokmen*, Chengshu Li, Wensi Ai, Benjamin Jose Martinez, Arman Aydin, Mona Anvari, Ayush K Chakravarthy, Hong-Xing Yu, Josiah Wong, Sanjana Srivastava, Sharon Lee, Shengxin Zha, Laurent Itti, Yunzhu Li, Roberto Martín-Martín, Miao Liu, Pengchuan Zhang, Ruohan Zhang, Li Fei-Fei, Jiajun Wu (*=equal contribution)
CVPR 2024 [paper] [code] [project page] [tools]
Highlight (top 12% of all accepted papers)

Visual Fact Checker: Enabling High-Fidelity Detailed Caption Generation
Yunhao Ge, Xiaohui Zeng, Jacob Samuel Huffman, Tsung-Yi Lin, Ming-Yu Liu, Yin Cui
CVPR 2024 [paper] [video] [project page]

DreamDistribution: Prompt Distribution Learning for Text-to-Image Diffusion Models
Brian Nlong Zhao, Yuhang Xiao*, Jiashu Xu*, Xinyang Jiang, Yifan Yang, Dongsheng Li, Laurent Itti, Vibhav Vineet†, Yunhao Ge† (*=co-second author, †=equal contribution)
ICLR 2025. [paper] [code] [project page]

3D Copy-Paste: Physically-Plausible Object Insertion for Monocular 3D Detection
Yunhao Ge, Hong-Xing Yu, Cheng Zhao, Yuliang Guo, Xinyu Huang, Liu Ren, Laurent Itti, Jiajun Wu
NeurIPS 2023 [paper] [code] [project page]

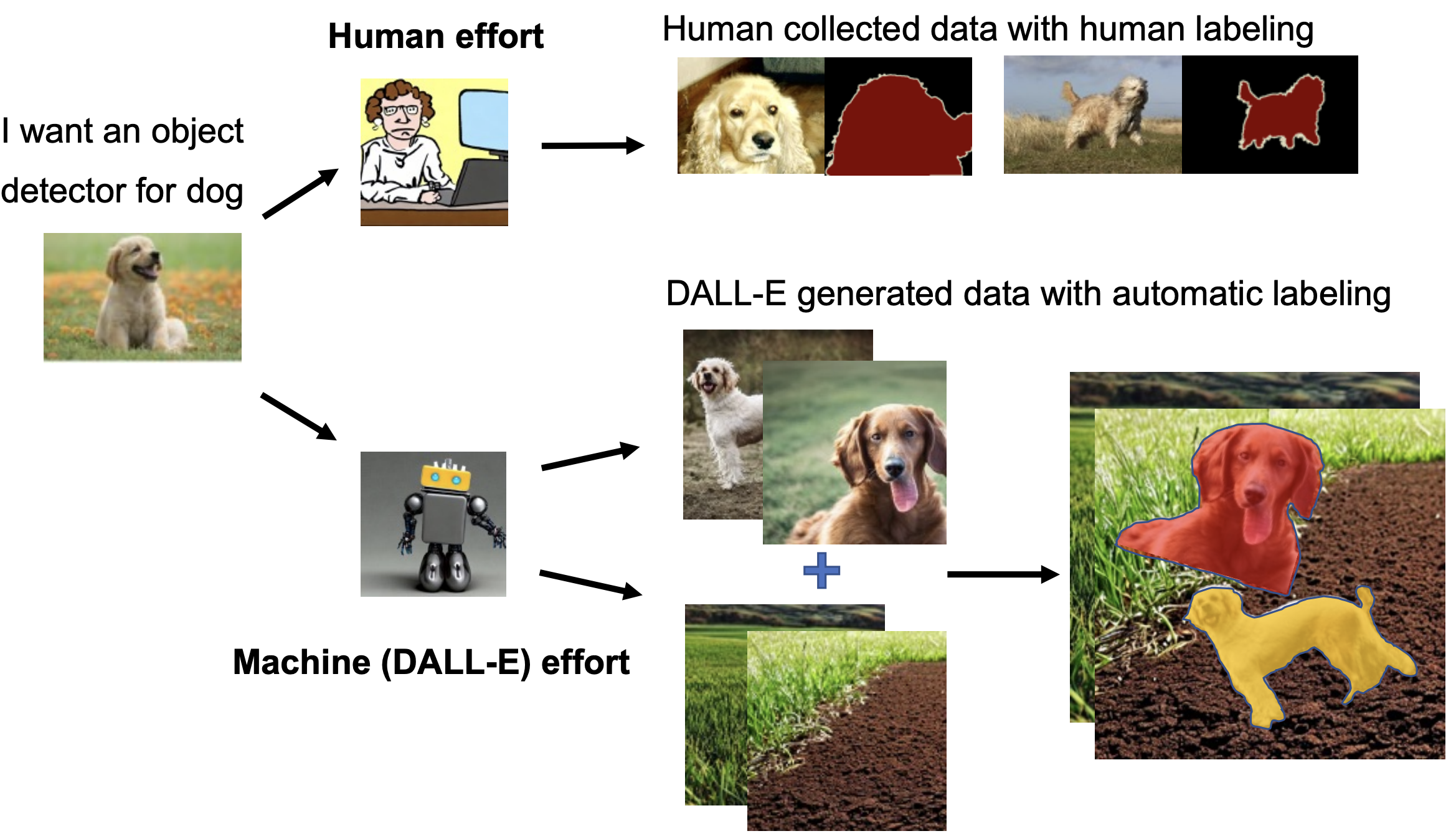

DALL-E for Detection: Language-driven Compositional Image Synthesis for Object Detection
Beyond Generation: Harnessing Text to Image Models for Object Detection and Segmentation
Yunhao Ge*, Jiashu Xu*, Brian Nlong Zhao, Neel Joshi, Laurent Itti, Vibhav Vineet (*=equal contribution)
[paper(Beyond Generation)] [paper(DALL-E for Detection)] [code]

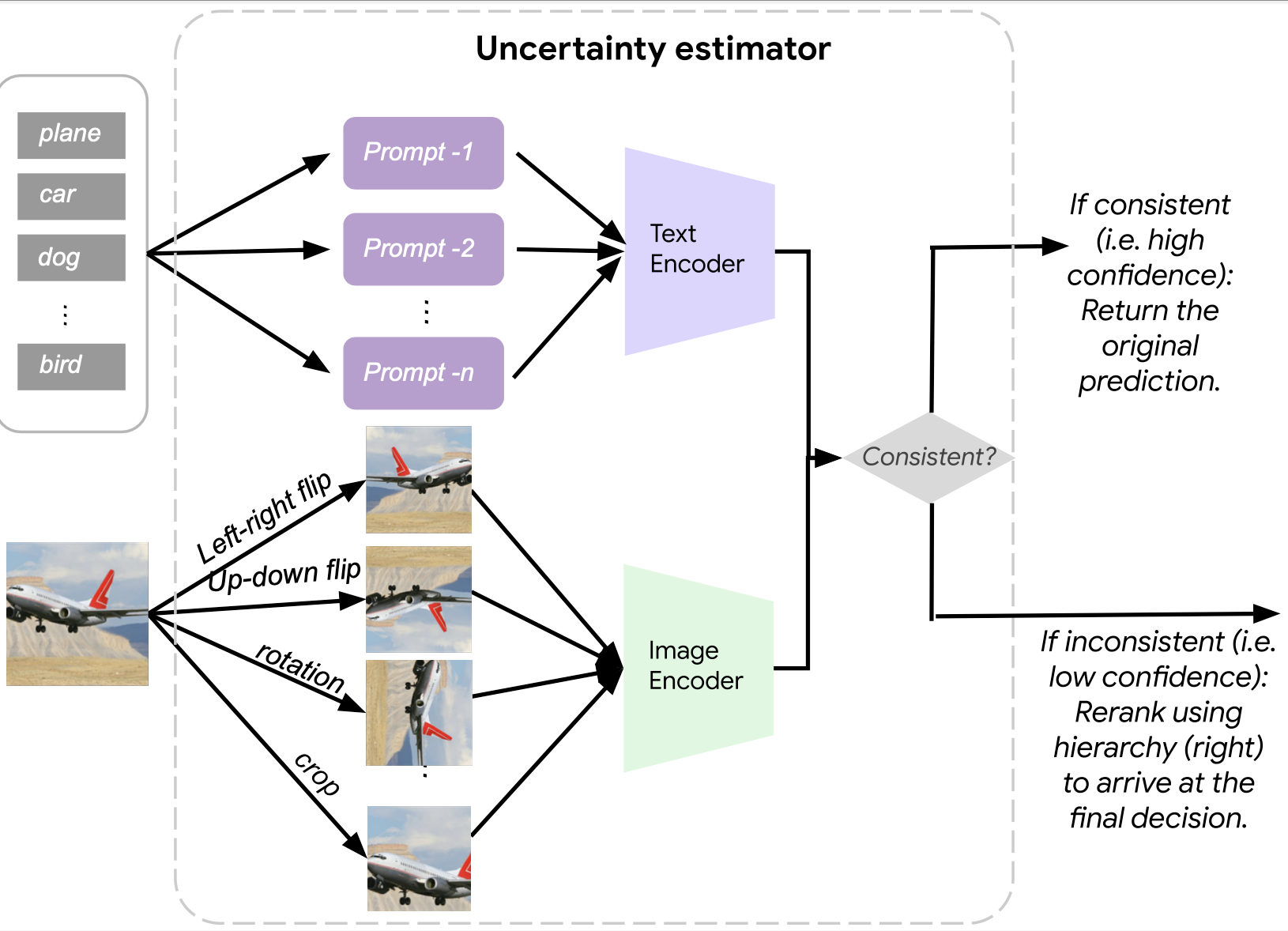

Improving Zero-shot Generalization and Robustness of Multi-modal Models
Yunhao Ge*, Jie Ren*, Andrew Gallagher, Yuxiao Wang, Ming-Hsuan Yang, Hartwig Adam, Laurent Itti, Balaji Lakshminarayanan, and Jiaping Zhao (*=equal contribution)
CVPR 2023 [paper] [code] [project page]

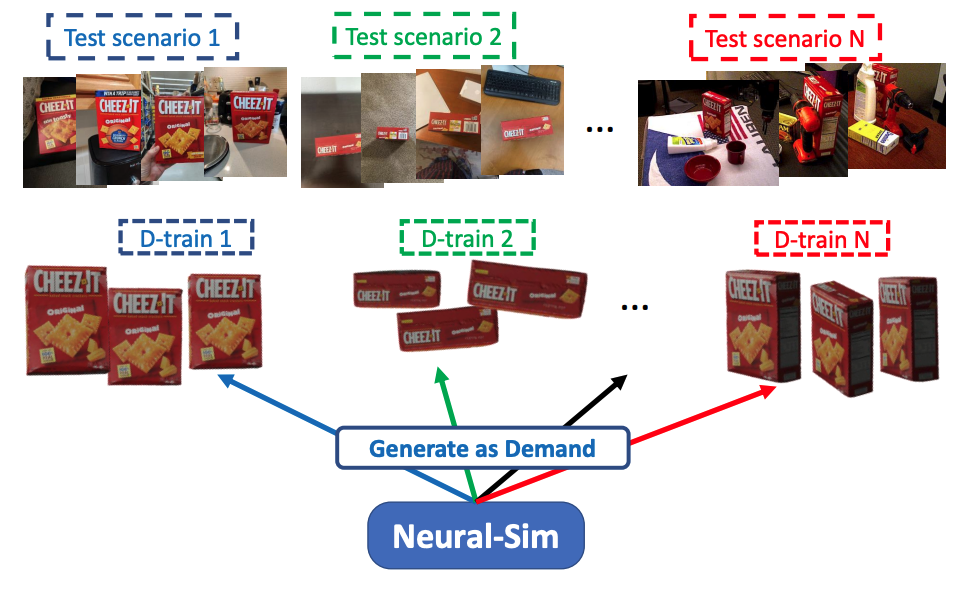

Neural-Sim: Learning to Generate Training Data with NeRF
Yunhao Ge, Harkirat Behl*, Jiashu Xu*, Suriya Gunasekar, Neel Joshi, Yale Song, Xin Wang, Laurent Itti, and Vibhav Vineet (*=equal contribution as second author)
ECCV 2022

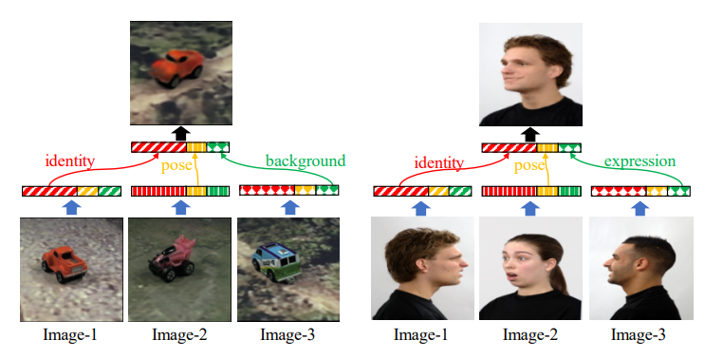

Zero-shot Synthesis with Group-Supervised Learning
Yunhao Ge, Sami Abu-El-Haija, Gan Xin and Laurent Itti
ICLR 2021 [paper] [code] [project page] [Fonts Dataset] [USC Viterbi Press] [知乎 (Zhihu)] [AI科技评论 (AI Technology Review)]

Last update: February 22, 2026